Production-Grade Generative AI in Enterprise Software

Generative AI is rapidly becoming a core capability in enterprise software. Organizations are moving beyond experiments to embed large language models into products, applications, and internal platforms. Gartner predicts that by 2026, more than 80 percent of enterprises will have used generative AI APIs or deployed generative AI-enabled applications, up from less than 5 percent in 2023. As adoption accelerates, the real challenge is no longer access to models but building production-ready systems that are secure, scalable, and reliable. This requires disciplined architecture, strong data engineering foundations, and operational controls designed for enterprise environments.

Most enterprise GenAI initiatives begin with controlled pilots. These pilots typically rely on static prompts, limited datasets, and a narrow set of users. While such implementations demonstrate potential, they fail to account for the realities of production environments.

In production, systems must handle unpredictable user behavior, concurrent requests, fluctuating traffic patterns, and evolving enterprise data. Latency expectations become stricter, costs grow with every request and every token of context, and failure modes multiply. Without architectural guardrails, small inefficiencies cascade into reliability and governance risks.
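One concrete guardrail for concurrent traffic is retrying transient failures (rate limits, timeouts) with capped exponential backoff and jitter, so that many clients do not retry in lockstep and amplify an outage. The sketch below assumes a hypothetical `TransientError` type raised by whatever model client you use; the attempt count and delays are illustrative defaults, not recommendations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error type for retryable failures (rate limits, timeouts)."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fn`, retrying on TransientError with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # retry budget exhausted: surface the failure to the caller
            # Full jitter: wait a random time up to the capped exponential delay,
            # which spreads out retries from many concurrent clients.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Injecting `sleep` keeps the function testable without real waiting; in production you would normally also cap total elapsed time per request.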

Another common failure point is embedding logic directly into prompts. Prompt-centric designs are fragile. They are difficult to version, hard to test, and nearly impossible to audit. Enterprises quickly discover that experimentation success does not translate into operational reliability.

Production-grade Generative AI systems prioritize predictability over novelty. They are designed to operate as dependable enterprise services rather than experimental features.

Key defining attributes include:

Enterprise systems must explain not only what an AI generated, but also why and from which data sources. This requirement fundamentally differentiates enterprise GenAI from consumer applications.

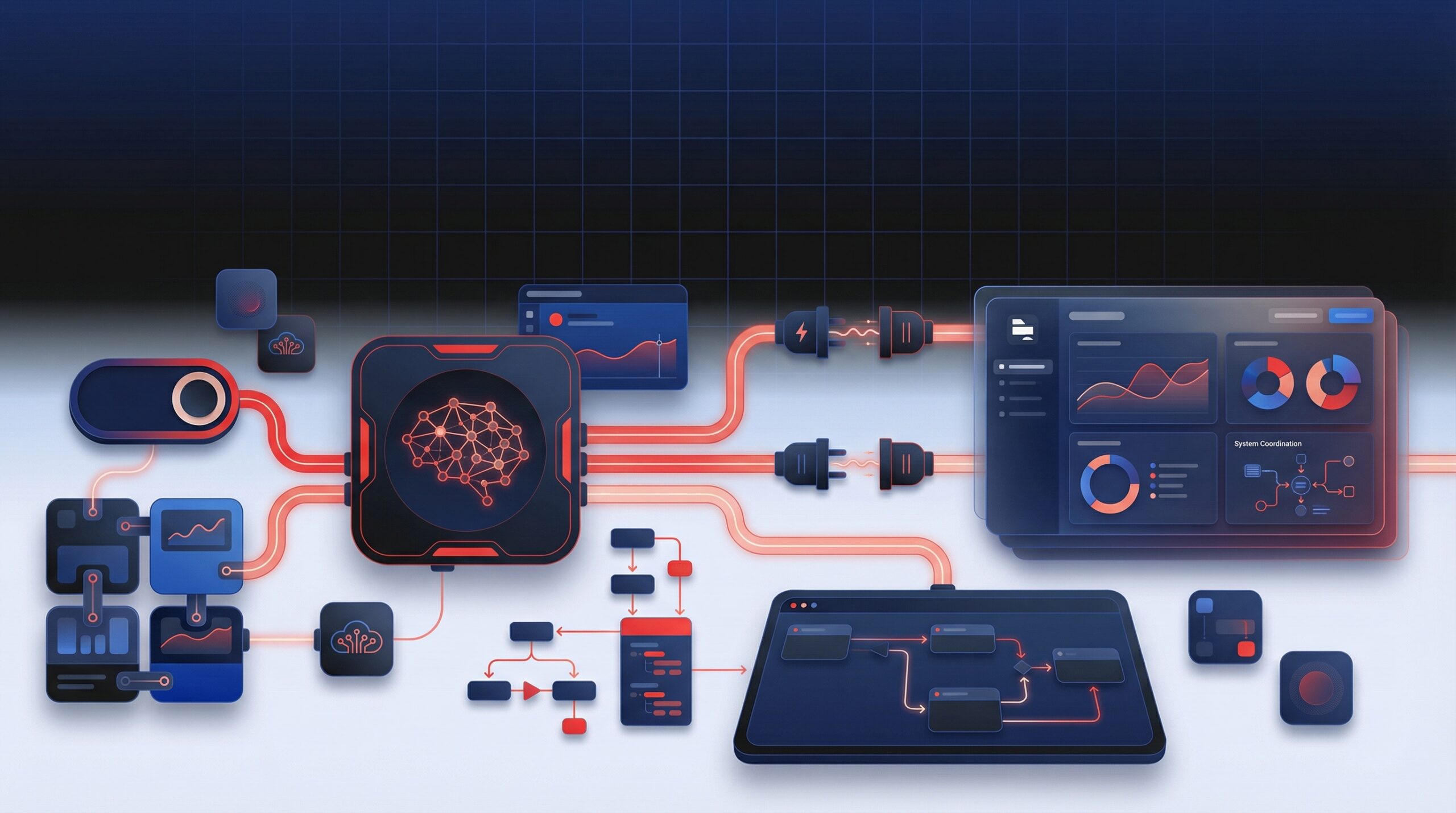

A scalable GenAI architecture separates responsibility across clearly defined layers.

User Interaction Layer

Interfaces embedded within enterprise applications capture intent and enforce input validation. This layer prevents malformed or malicious inputs from reaching the model.
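A minimal sketch of input validation at this layer might normalize text, strip non-printing characters, enforce a length limit, and reject obviously suspicious phrasing. The limit `MAX_INPUT_CHARS` and the regex deny-list are assumptions for illustration; pattern matching catches only crude prompt-injection attempts and should sit alongside other controls, not replace them.

```python
import re
import unicodedata
from dataclasses import dataclass

MAX_INPUT_CHARS = 4000  # assumed limit; tune to the model's context budget

# Illustrative deny-list only: it flags crude injection attempts, nothing more.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (the )?system prompt", re.IGNORECASE),
]


@dataclass
class ValidationResult:
    ok: bool
    text: str
    reason: str = ""


def validate_user_input(raw: str) -> ValidationResult:
    # Normalize Unicode so visually identical strings compare equal.
    text = unicodedata.normalize("NFKC", raw)
    # Drop control and other non-printing characters (category "C*"), keeping newlines and tabs.
    text = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch)[0] != "C")
    text = text.strip()
    if not text:
        return ValidationResult(False, text, "empty input")
    if len(text) > MAX_INPUT_CHARS:
        return ValidationResult(False, text, "input too long")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(text):
            return ValidationResult(False, text, "possible prompt injection")
    return ValidationResult(True, text)
```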

Orchestration and Control Layer

This layer manages prompt templates, contextual assembly, routing logic, and guardrails. It ensures consistency and enforces governance policies centrally.
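Treating prompt templates as versioned artifacts, rather than strings scattered through application code, is what makes them testable and auditable. The `PromptRegistry` below is a hypothetical in-memory sketch built on Python's standard `string.Template`; a real platform would back it with a database or a Git-managed store.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptTemplate:
    name: str
    version: str
    body: Template


class PromptRegistry:
    """Hypothetical central registry: each (name, version) pair is immutable once published."""

    def __init__(self) -> None:
        self._templates: dict[tuple[str, str], PromptTemplate] = {}

    def register(self, name: str, version: str, body: str) -> None:
        key = (name, version)
        if key in self._templates:
            # Changing a published template silently would break auditability.
            raise ValueError(f"{name}@{version} already registered; publish a new version")
        self._templates[key] = PromptTemplate(name, version, Template(body))

    def render(self, name: str, version: str, **values: str) -> str:
        # substitute() raises KeyError when a placeholder has no value, so
        # incomplete context fails loudly instead of reaching the model.
        return self._templates[(name, version)].body.substitute(**values)
```

Because callers request an explicit version, a template change becomes a reviewable release rather than an invisible edit.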

Inference Layer

Models are invoked through managed APIs or internal inference clusters. Version pinning and controlled rollout strategies prevent unexpected behavior changes.
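Version pinning and controlled rollout can be combined by routing a fixed share of users to a candidate model while everyone else stays on the pinned version. This sketch assumes hash-based bucketing on a user ID so that each user sees a consistent model; the model identifiers are placeholders, not real product names.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRoute:
    stable: str          # pinned, fully qualified model version (placeholder name)
    canary: str          # candidate version under controlled rollout (placeholder name)
    canary_percent: int  # share of users sent to the canary, 0 to 100

    def __post_init__(self) -> None:
        if not 0 <= self.canary_percent <= 100:
            raise ValueError("canary_percent must be between 0 and 100")


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, 100) so routing is sticky per user."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def select_model(route: ModelRoute, user_id: str) -> str:
    return route.canary if bucket(user_id) < route.canary_percent else route.stable


route = ModelRoute(stable="model-a@2024-01", canary="model-b@2024-06", canary_percent=10)
print(select_model(route, "user-123"))
```

Raising `canary_percent` step by step, while watching quality and latency metrics, gives a rollback path: set it back to 0.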

Integration Layer

Enterprise systems such as CRMs, ERPs, data warehouses, and document repositories are accessed through secured connectors.

This modular design allows teams to evolve each layer independently.

Enterprises cannot rely on model knowledge frozen at training time. Business data is dynamic, regulated, and proprietary.

Retrieval-Augmented Generation addresses this by injecting verified enterprise context into every request. Instead of generating responses from probabilistic memory, the model reasons over retrieved documents and structured records.
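The core RAG loop (retrieve relevant records, then build a prompt that restricts the model to those sources and asks it to cite them) can be sketched in a few lines. To stay self-contained, this example swaps in simple term-frequency cosine similarity where a production system would use embeddings and a vector index; the document IDs and prompt wording are illustrative assumptions.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str


def _vector(text: str) -> Counter:
    # Bag-of-words term counts; a stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    q = _vector(query)
    scored = [(_cosine(q, _vector(d.text)), d) for d in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents with no overlap at all rather than padding the context.
    return [d for score, d in scored[:k] if score > 0]


def build_grounded_prompt(query: str, docs: list[Document]) -> str:
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
```

Keeping source IDs in the prompt is what later lets the system explain which records an answer came from.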

Enterprise use cases

RAG can substantially reduce hallucinations and keeps outputs aligned with authoritative enterprise data.

High-quality outputs depend on disciplined data engineering.

Enterprise GenAI platforms require pipelines that ingest structured records, unstructured documents, logs, and events. Data must be cleaned, classified, enriched with metadata, and versioned to ensure consistency.
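A single ingestion step covering cleaning, classification metadata, and versioning might look like the sketch below. The classification labels, the `owner` field, and the use of a SHA-256 content hash as the version marker are assumptions chosen for illustration; the hash changes whenever the cleaned content changes, which tells downstream jobs when to re-embed a document.

```python
import hashlib
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Assumed classification scheme; substitute your organization's labels.
ALLOWED_CLASSIFICATIONS = {"public", "internal", "restricted"}


@dataclass(frozen=True)
class IngestedRecord:
    source_id: str
    text: str
    content_hash: str  # changes whenever the cleaned content changes
    metadata: dict = field(default_factory=dict)


def clean(text: str) -> str:
    # Collapse whitespace runs left behind by PDF or HTML extraction.
    return re.sub(r"\s+", " ", text).strip()


def ingest(source_id: str, raw_text: str, classification: str, owner: str) -> IngestedRecord:
    if classification not in ALLOWED_CLASSIFICATIONS:
        raise ValueError(f"unknown classification: {classification}")
    text = clean(raw_text)
    content_hash = hashlib.sha256(text.encode("utf-8")).hexdigest()
    metadata = {
        "classification": classification,
        "owner": owner,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
    }
    return IngestedRecord(source_id, text, content_hash, metadata)
```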

Embedding strategies must align with data types and retrieval use cases. Chunking decisions directly impact recall and precision. Without proper data lifecycle management, retrieval quality degrades rapidly.
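To make the chunking trade-off concrete, here is a minimal fixed-size chunker with overlap, counted in whitespace-separated words. Word counts are an assumption for simplicity; many pipelines chunk by model tokens or by document structure (headings, paragraphs) instead. Larger chunks carry more context per hit, while overlap reduces the chance that a fact is split across a boundary.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


print(chunk_words("0 1 2 3 4 5 6 7 8 9", chunk_size=4, overlap=1))
# ['0 1 2 3', '3 4 5 6', '6 7 8 9']
```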

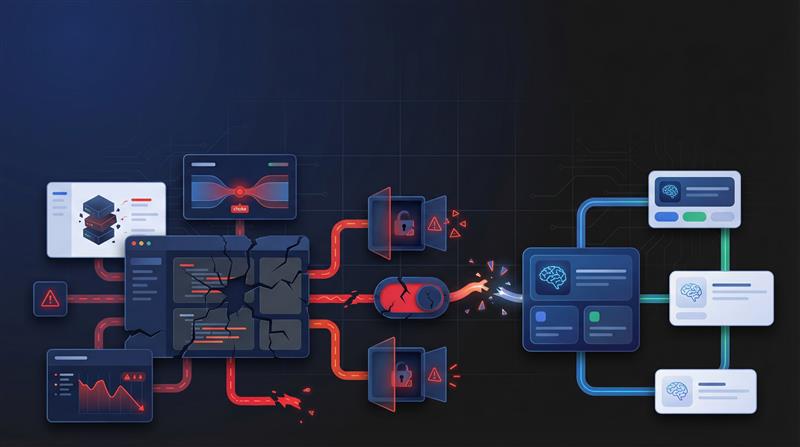

Security risks in GenAI systems extend beyond traditional application threats.

Key controls include:

Compliance requirements demand full audit trails. Every request, retrieval, and response must be traceable for regulatory review.
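A traceable request usually means one structured log record that ties the caller, the model and prompt-template versions, the retrieved sources, and the output together. The field names below are assumptions, and hashing the prompt and response (instead of copying raw text into the log) is one design choice among several; some regulations will require storing full payloads in a separate, access-controlled store.

```python
import hashlib
import json
import uuid
from datetime import datetime, timezone


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(
    user_id: str,
    model_version: str,
    template_version: str,
    retrieved_doc_ids: list[str],
    prompt: str,
    response: str,
) -> str:
    """Build one JSON audit line linking a request to its inputs, sources, and output."""
    record = {
        "request_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model_version": model_version,
        "template_version": template_version,
        "retrieved_doc_ids": retrieved_doc_ids,
        # Hashes prove what was sent and returned without copying
        # potentially sensitive text into the log itself.
        "prompt_sha256": _sha256(prompt),
        "response_sha256": _sha256(response),
    }
    return json.dumps(record, sort_keys=True)
```

Appending these lines to write-once storage gives reviewers a per-request trail from answer back to source documents.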

Generative AI systems are dynamic: models are updated, prompts evolve, and outputs depend on constantly changing data. To ensure reliability in production, enterprises need robust MLOps practices tailored for GenAI.

Key considerations include:

Without disciplined lifecycle management, GenAI platforms can quickly become brittle, unpredictable, and difficult to maintain. A structured MLOps approach ensures systems evolve safely, remain reliable, and continue delivering measurable business value.
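One lifecycle control that keeps changes safe is a regression gate: before a new model or prompt version is promoted, it is scored against a fixed "golden" set of questions and must not fall below the current baseline. The substring check and the 2-point tolerance below are simplifying assumptions; real evaluations often add human review or model-graded scoring.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class GoldenCase:
    question: str
    must_contain: tuple[str, ...]  # facts a correct answer has to mention


def pass_rate(generate: Callable[[str], str], cases: list[GoldenCase]) -> float:
    """Fraction of golden cases whose answer mentions every required fact."""
    if not cases:
        raise ValueError("golden set is empty")
    passed = 0
    for case in cases:
        answer = generate(case.question).lower()
        if all(fact.lower() in answer for fact in case.must_contain):
            passed += 1
    return passed / len(cases)


def promote_candidate(baseline: float, candidate: float, tolerance: float = 0.02) -> bool:
    """Allow promotion only if the candidate does not regress beyond the tolerance."""
    return candidate >= baseline - tolerance
```

Here `generate` stands for whatever function calls the candidate configuration; running the same gate in CI for every prompt or model change turns quality into a release criterion.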

Implementing Generative AI in an enterprise is not just about adopting the latest models; it requires a structured, phased approach that balances innovation with risk, scalability, and performance. Here’s a deeper dive into each phase:

This phased approach allows enterprises to innovate rapidly with Generative AI while maintaining control over risk, compliance, and cost. By starting small, building technical foundations, and scaling systematically, organizations can maximize impact without compromising security or reliability.

Measuring the success of enterprise Generative AI is about more than technical performance; it is about connecting AI outputs to business impact. The right metrics ensure teams can make informed decisions, optimize resources, and scale solutions confidently.

Cost per Request and per User

Grounding Accuracy and Relevance

Latency Under Peak Load

Adoption and Workflow Completion Rates

Additional Metrics to Consider

By combining these technical and business-focused metrics, enterprises can continuously optimize Generative AI deployments. Metrics not only evaluate current performance but also guide prioritization, resource allocation, and strategic investment decisions, ensuring that AI initiatives deliver measurable value.
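Two of the metrics above, cost per request/per user and latency under load, can be computed directly from request logs. In this sketch the per-1,000-token prices are placeholders rather than any provider's actual rates, and p95 latency uses the nearest-rank percentile definition.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestLog:
    user_id: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


# Placeholder prices per 1,000 tokens; substitute the rates from your provider contract.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015


def request_cost(r: RequestLog) -> float:
    return (r.input_tokens / 1000) * PRICE_PER_1K_INPUT + (r.output_tokens / 1000) * PRICE_PER_1K_OUTPUT


def summarize_metrics(logs: list[RequestLog]) -> dict[str, float]:
    if not logs:
        raise ValueError("no requests to summarize")
    total = sum(request_cost(r) for r in logs)
    users = {r.user_id for r in logs}
    latencies = sorted(r.latency_ms for r in logs)
    # Nearest-rank p95: the smallest latency with at least 95% of samples at or below it.
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]
    return {
        "cost_per_request": total / len(logs),
        "cost_per_user": total / len(users),
        "p95_latency_ms": p95,
    }
```

Computing these per time window (for example, per hour at peak) shows whether cost and latency stay within budget as traffic grows.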

Generative AI can transform enterprise software, but only when it is built for production. Secure architecture, reliable data pipelines, and phased scaling turn experimentation into impact. AcmeMinds helps enterprises deploy GenAI that’s scalable, compliant, and business-ready.

Generative AI becomes enterprise-ready when it is built on secure architecture, supported by reliable data pipelines, enforced with governance controls, and continuously monitored in production. These foundations ensure scalability, compliance, and dependable performance.

Retrieval-Augmented Generation grounds AI responses in verified enterprise data sources. This approach reduces hallucinations, improves accuracy, and significantly lowers compliance and regulatory risks in business-critical applications.

Enterprises manage GenAI costs through token optimization, intelligent caching, and workload-aware model selection. These techniques help balance performance and accuracy while keeping inference and operational expenses predictable.
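Intelligent caching can be as simple as reusing a response when the same normalized prompt is sent to the same model version within a time window. This is an in-memory sketch under stated assumptions: whitespace and case normalization is a deliberate trade-off (it increases hits but could merge prompts where case matters), and a production deployment would typically use a shared store rather than a per-process dictionary.

```python
import hashlib
import time
from typing import Callable


class ResponseCache:
    """In-memory TTL cache for model responses, keyed on model version plus normalized prompt."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, str]] = {}

    @staticmethod
    def key(model_version: str, prompt: str) -> str:
        # Including the model version means an upgrade never serves answers from the old model.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(f"{model_version}\x00{normalized}".encode("utf-8")).hexdigest()

    def get_or_compute(self, model_version: str, prompt: str, compute: Callable[[str], str]) -> str:
        k = self.key(model_version, prompt)
        now = self._clock()
        hit = self._entries.get(k)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # cache hit: no inference cost incurred
        response = compute(prompt)
        self._entries[k] = (now, response)
        return response
```

Caching suits repeated, non-personalized questions; responses that depend on user-specific retrieved data need the user or access scope in the key as well.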

GenAI platforms are typically owned by platform engineering or data engineering teams. Close collaboration with security teams ensures strong access controls, compliance alignment, and safe enterprise-wide adoption.

Unlike traditional machine learning, Generative AI requires continuous context management and output governance. Beyond training models, teams must manage prompts, retrieved data, and generated outputs in real time.

Yes, GenAI systems can be audited when designed with comprehensive logging, traceability, and version control. These capabilities enable accountability, compliance reporting, and post-incident analysis.